Prove the value of AI at scale

To sustain AI investment and expand its scope, you need to move beyond cost-cutting narratives and build a case for business impact. You need a new economic model: one that redefines success, blends old budget lines, and links performance directly to business outcomes.

When done right, AI goes far beyond improving support efficiency. It rewires the financial model: breaking the link between support costs and revenue growth, and turning support into a contributor to customer activation, retention, and lifetime value.

Stop measuring AI like software and thinking about ROI in isolation. Start treating your AI Agent as a new workforce capability that changes how your support function creates and captures value.

In this section, we'll explore how to redefine value in an AI-first model, evolve performance measurement, tie support directly to business outcomes, blend budgets for your new operating model, and reinvest savings for long-term gain.

Reframe how you define value

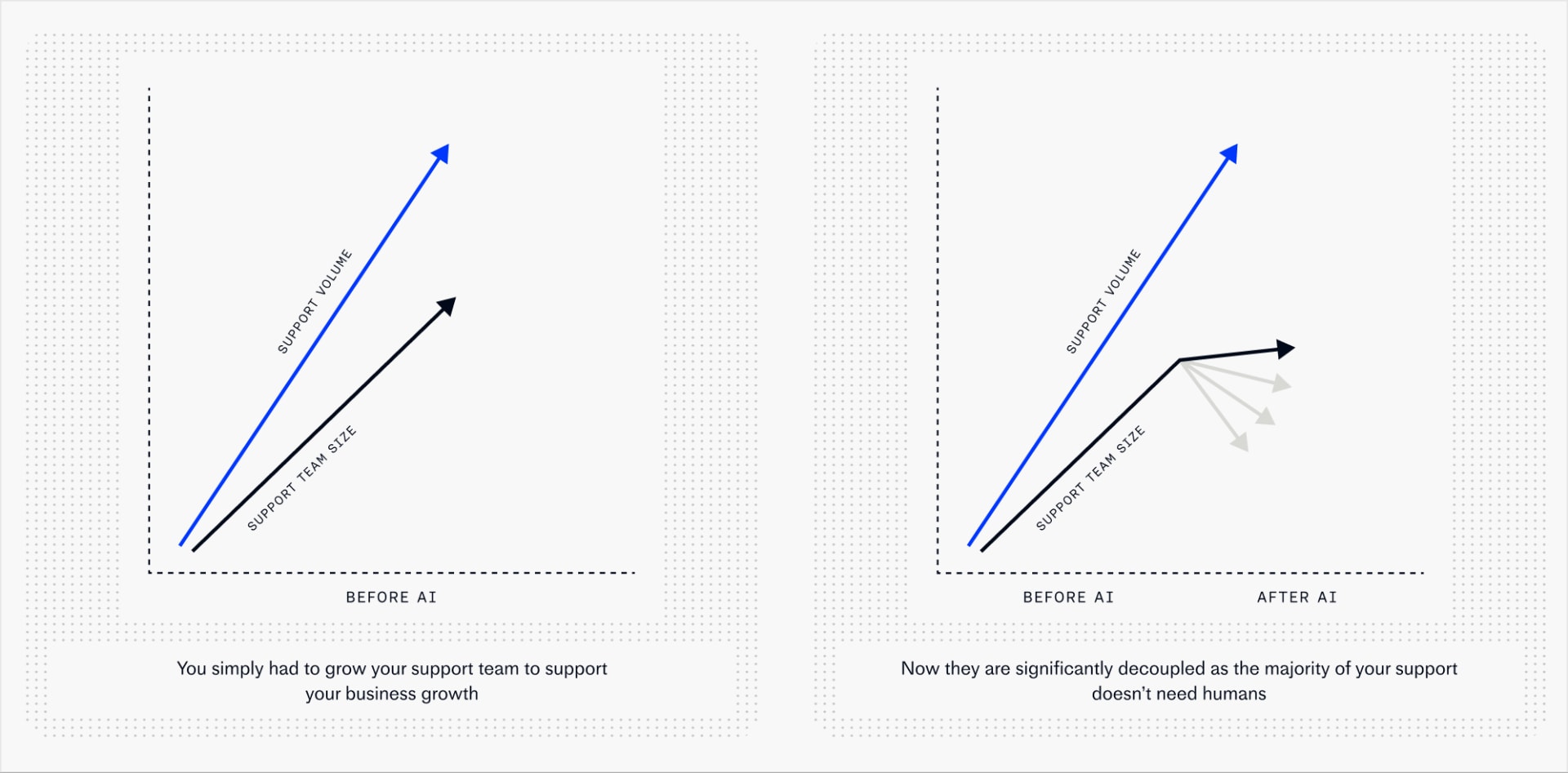

Traditional support economics are built around linear growth: more tickets mean more headcount, more outsourcing, more software. Value is measured by containment: deflections, handle times, tickets closed.

Here's what value looks like in an AI-first model:

- Human productivity: Your team focuses on more strategic areas, not the queue.

- System improvement: Every resolved query makes the system smarter.

- Revenue influence: Support becomes a lever for activation, retention, and growth.

- Organizational agility: You scale service without scaling headcount.

And here's how that transformation looks visually:

In the traditional model, support costs and revenue grow in lockstep. Support headcount rises as the business grows. Value is hard to attribute.

In the AI-first model, revenue continues to climb. Support costs flatten, grow slowly, or decline. Support starts influencing revenue through better CX and faster time-to-value.

This is what it means to decouple cost from growth (and what every CFO wants to see).

But that curve doesn't bend on day one. The early stages often look like additive spend because you're funding a new workforce layer while your old one is still intact. The real gains come gradually through attrition (not backfilling open roles), pausing BPO expansion, slowing down hiring plans, and the compounding effect of automation.

(See Chapter 2: Launch it for more detail on how automation rate influences time-to-ROI.)
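A rough capacity model can show why the curve bends gradually rather than on day one. The sketch below is illustrative only: the function name `human_agents_needed`, the per-agent capacity of 500 cases a month, and the volume and automation figures are all assumptions, not numbers from this guide.

```python
import math


def human_agents_needed(monthly_volume: int, automation_rate: float,
                        cases_per_agent: int) -> int:
    """Agents needed to cover the volume the AI Agent does not resolve."""
    if not 0.0 <= automation_rate <= 1.0:
        raise ValueError("automation_rate must be between 0 and 1")
    if cases_per_agent <= 0:
        raise ValueError("cases_per_agent must be positive")
    # Round away floating-point noise before taking the ceiling.
    human_volume = round(monthly_volume * (1.0 - automation_rate), 6)
    return math.ceil(human_volume / cases_per_agent)


# Hypothetical scenario: volume doubles while automation climbs.
scenario = [(10_000, 0.00), (12_000, 0.20), (15_000, 0.40), (20_000, 0.60)]
for volume, rate in scenario:
    agents = human_agents_needed(volume, rate, cases_per_agent=500)
    print(f"volume={volume:>6}  automation={rate:.0%}  agents={agents}")
```

In this made-up scenario, volume doubles while the human headcount required falls from 20 to 16. That is the kind of gap you close through attrition and slower hiring rather than cuts.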

Evolve your performance metrics

1. Measuring human team performance

Metrics like average handle time (AHT), cases handled, and first contact resolution (FCR) defined what "good" performance looked like when human agents managed the majority of support queries and success meant moving quickly through high volumes. But they no longer reflect the value of your team's work.

In many cases, they create the wrong incentives: pushing speed over quality, or signaling failure when agents are actually solving more difficult problems. Here's why they don't work:

Average Handle Time (AHT)

Measures how long an agent spends on each conversation

AHT rises, for good reason.

When AI handles the majority of queries, human agents are left with the edge-case, sensitive, or emotionally charged issues. Their AHT naturally rises, but that's a sign of impact, not inefficiency.

Cases handled

Total cases, tickets, or conversations resolved

Cases handled ≠ productivity.

Legacy productivity models are still built on volume-first logic: more cases closed = more value delivered. In an AI-first world, that logic breaks down.

Human agents are now handling the edge cases: the issues that take 5-10x the effort of a standard support query. Many are also shifting focus to other types of work, like training the AI Agent, improving content, and analyzing performance. You'll likely need a period of re-benchmarking productivity, especially as team roles evolve, AI takes on more volume, and human work becomes more strategic.

First contact resolution (FCR)

Measures how often a query is resolved in the first interaction

FCR drops, but reflects complexity, not failure.

AI on the frontlines means one-touch queries aren't reaching your team. Measuring their success through FCR doesn't work in this model.

You don't need to throw out these traditional metrics entirely, but they become less relevant once you've deployed an AI Agent. Instead, you need to focus on:

- Where human effort is going.

- What value it's driving.

- How humans are improving the system as a whole.

Your team's responsibilities are changing, and your metrics need to evolve with them. That means shifting away from blunt metrics that reward speed and volume, and toward metrics that reflect value:

Human involvement rate

How often do humans need to step in, and are those interventions high-value?

- % of total conversations handled by a human.

- % of conversations handed off by AI.

- Trendline of human involvement over time.

Resolution of handover queries

Are humans completely resolving the questions that are handed over?

- % of conversations handed off to human agents resolved without repeat contact.

- Time to resolution for conversations handed off to human agents.

- Customer sentiment on handoff cases.

Value-adding activities

How much of your team's effort is now focused on work tied to retention, growth, or product insights? Are human-led conversations driving meaningful customer impact?

- % of conversations tied to onboarding, expansion, or retention goals.

- Feature activation or product adoption tied to human support.

- Volume and impact of product feedback submitted by support.

- Volume of proactive, consultative engagements.

Skill evolution

Are agents developing the skills needed in an AI-first organization, like consultative support, content creation, and knowledge management?

- AI fluency level (training completion, practical application).

- Contribution to systems improvement (playbooks, workflows).

- Internal promotions into new AI-era roles.

Quality of human-AI collaboration

How effectively are human agents influencing AI performance through feedback and knowledge improvement?

- % of team time spent on "out-of-inbox" improvement activities (content, QA, training).

- Quality/impact of suggested content (based on resolution uplift).
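The first two metric groups above (human involvement rate and resolution of handover queries) can be computed from simple per-conversation flags. This is a minimal sketch: the `Conversation` fields and the `human_metrics` function are hypothetical names, and your helpdesk export will likely use different ones.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    handled_by_human: bool   # a human agent took part at any point
    handed_off_by_ai: bool   # the AI Agent passed the conversation to a human
    repeat_contact: bool     # the customer came back about the same issue


def human_metrics(conversations: list[Conversation]) -> dict[str, float]:
    """Return involvement and handoff-resolution rates as fractions (0 to 1)."""
    total = len(conversations)
    if total == 0:
        raise ValueError("no conversations to measure")
    human = [c for c in conversations if c.handled_by_human]
    handoffs = [c for c in conversations if c.handed_off_by_ai]
    resolved = [c for c in handoffs if not c.repeat_contact]
    return {
        "human_involvement_rate": len(human) / total,
        "ai_handoff_rate": len(handoffs) / total,
        "handoffs_resolved_without_repeat": (
            len(resolved) / len(handoffs) if handoffs else 0.0
        ),
    }


# Hypothetical sample: 6 AI-only, 3 clean handoffs, 1 handoff with repeat contact.
sample = (
    [Conversation(False, False, False)] * 6
    + [Conversation(True, True, False)] * 3
    + [Conversation(True, True, True)]
)
for name, value in human_metrics(sample).items():
    print(f"{name}: {value:.0%}")
```

Tracking these values week over week gives you the trendline of human involvement without any extra instrumentation.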

Instead of asking, "How many cases did we close?"

Ask:

- How are we helping customers get to value faster? E.g., what is it worth to unblock a customer from using your product instantly, in terms of retention or expansion?

- How are we shaping and strengthening AI performance? E.g., are human agents improving Fin's resolution quality, coverage, or confidence?

- How are we improving the system overall? E.g., through better content, workflows, and journey design?

2. Measuring AI Agent performance

In an AI-first model, your AI Agent is your front line. It needs to be evaluated with the same rigor, ownership, and outcome-focus you apply to human teams.

These are the core metrics that matter when measuring AI Agent performance at scale:

Resolution rate

% of conversations fully resolved by the AI Agent without human intervention

Resolution, not deflection, is the true measure of value. This tells you whether the AI Agent is actually solving problems, not just containing volume.

Target: ≥75% resolution (across tasks, queries, multi-turn flows)

Involvement rate

% of inbound support volume that the AI Agent is involved in, regardless of outcome

This shows the AI Agent's footprint. It reveals whether it's catching enough of your volume to make an impact.

Target: ≥80% AI involvement

Automation rate

Resolution × Involvement = Automation %

This demonstrates the AI Agent's overall impact across channels.

Target: ≥60% automation

These metrics work together. Resolution tells you if it's working. Involvement shows where it's working. Automation reveals how much work it's doing.
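The automation formula above is a single multiplication, sketched here with both inputs as fractions between 0 and 1. The function name `automation_rate` is an illustrative choice, not part of any product API.

```python
def automation_rate(resolution_rate: float, involvement_rate: float) -> float:
    """Share of all inbound volume fully resolved by the AI Agent."""
    for name, value in (("resolution_rate", resolution_rate),
                        ("involvement_rate", involvement_rate)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return resolution_rate * involvement_rate


# The resolution and involvement figures quoted above, as fractions.
rate = automation_rate(resolution_rate=0.75, involvement_rate=0.80)
print(f"Automation rate: {rate:.0%}")
```

At 75% resolution and 80% involvement the product is 60%, which is why the three figures quoted above line up. It also shows that a high resolution rate has limited effect if the AI Agent only sees a small share of your volume.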

Now, you need to measure how customers feel about it.

3. Measuring the customer experience

Teams often rely on metrics like CSAT and deflection rate to measure the customer experience, but neither offers a reliable read on what customers are actually experiencing.

CSAT only covers a small fraction of conversations (often less than 10%) and tends to capture extremes. Deflection rate assumes that if a query didn't reach a human, it must have been resolved. But that's a risky assumption. These metrics miss context and nuance. They make it hard for teams to know what to fix next, or whether anything's actually improving.

At its core, assessing the customer experience comes down to two things:

- Did the customer get the help they needed?

- How did they feel about the experience?

These questions aren't new, but until recently, support teams didn't have reliable ways to answer them.

Here's how to shift your measurement model to get a true read on the customer experience.

Focus less on deflection, and more on resolution

Traditionally, deflection rate focused purely on whether or not queries reached a human agent. It was used as a proxy for success, assuming that if a customer didn't reach a human, their issue was resolved. But that's not always true.

In the AI era, deflection alone doesn't tell you enough. While helpful as an early signal, it's a limited, and potentially misleading, measure of success. What matters is whether the issue was resolved, regardless of who handled it.

With AI now capable of resolving the majority of queries, we need to shift our focus from deflection to resolution.

Here's what you need to do:

Change what you report on

If your dashboards prioritize deflection rate, update them. Focus on resolution rate instead, and track metrics like:

- % of conversations resolved end-to-end by AI.

- % of conversations resolved without repeat contact.

- % of handoffs that were avoidable (i.e. the AI could have resolved, but didn't).

Update your targets

Set goals around resolution rate, not just deflection.

Use unresolved queries to iterate and improve

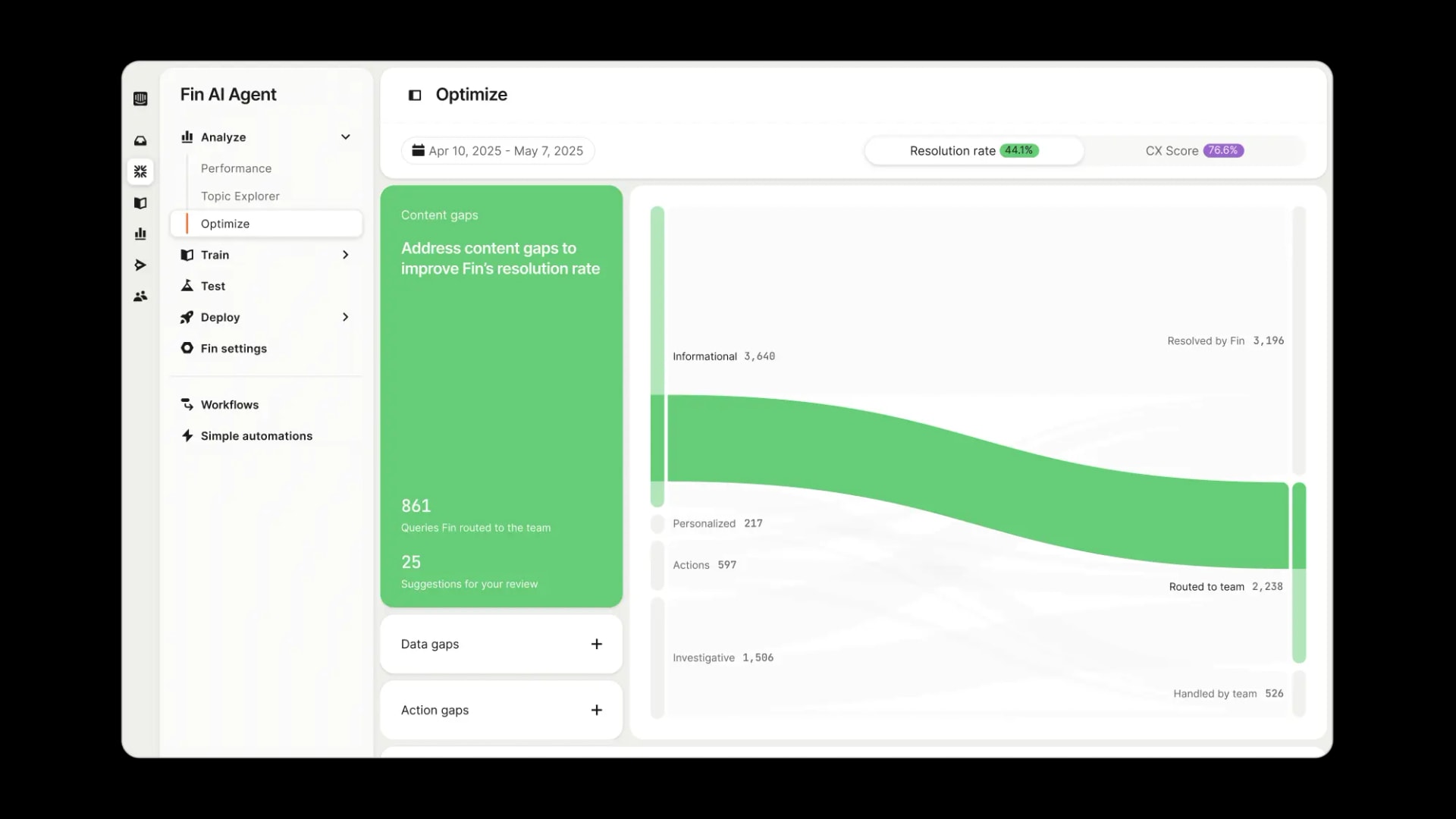

Resolution rate tells you what's working. But what's unresolved tells you where to improve. Look at what AI isn't resolving, and why. Is the content unclear? Is it a configuration issue? Use this to continuously improve the system.

Fin surfaces recommended fixes right alongside these breakdowns – editing content, refining a prompt, or adding a missing step. This creates a feedback loop built directly into the system.

Deflection tells you what didn't reach your team. Resolution tells you what's actually working, and what to fix next.
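If your tooling does not break down unresolved conversations for you, a simple count by reason is a reasonable starting point. The sketch below assumes each conversation record carries a `resolved` flag and an `unresolved_reason` label. Both field names and the reason labels are hypothetical.

```python
from collections import Counter


def unresolved_breakdown(conversations: list[dict]) -> list[tuple[str, int]]:
    """Count unresolved conversations by reason, most frequent first."""
    reasons = Counter(
        c["unresolved_reason"]
        for c in conversations
        if not c["resolved"]
    )
    return reasons.most_common()


# Hypothetical sample; real reason labels depend on your tooling.
sample = [
    {"resolved": True, "unresolved_reason": None},
    {"resolved": False, "unresolved_reason": "content_unclear"},
    {"resolved": False, "unresolved_reason": "content_unclear"},
    {"resolved": False, "unresolved_reason": "configuration_issue"},
    {"resolved": True, "unresolved_reason": None},
]
for reason, count in unresolved_breakdown(sample):
    print(f"{reason}: {count}")
```

Sorting by frequency puts the largest source of unresolved volume first, which is usually the right place to begin fixing.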

If you want to scale AI successfully, you need to understand what AI is (and isn't) resolving, and how the customer feels about it.

In the next section, we'll unpack how to measure customer experience at scale.

Measure customer sentiment and satisfaction across all conversations with AI

Instead of relying on <10% CSAT survey coverage, you can use AI to analyze 100% of customer conversations. This level of insight means you can understand how customers actually feel and turn gaps into opportunities:

- Real-time problem detection replaces quarterly survey analysis.

- Proactive support replaces reactive problem-solving.

- Support data becomes an instant source of intelligence that drives continuous improvement.

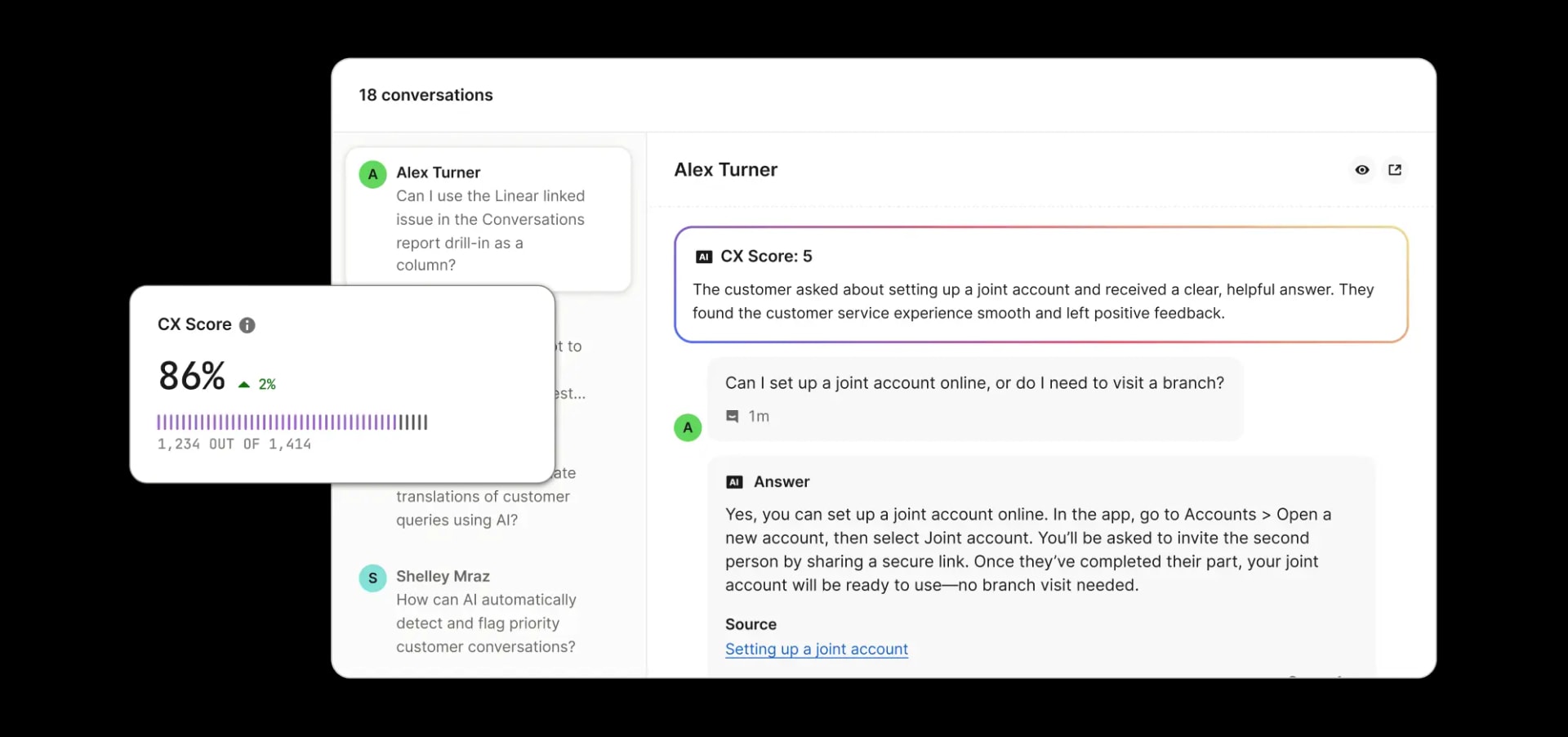

There are a number of ways to approach this, but here's an example of how Fin surfaces insights from conversations. It evaluates every conversation across resolution status, customer sentiment, and service quality, generating a CX rating from 1–5 for each conversation.

CX Score Reasons take this further by explaining what drove each rating. This means you're not just seeing that a conversation scored poorly, but rather getting insight into exactly why. These individual ratings contribute to a broader Customer Experience Score, with full filtering and segmentation capabilities so you can identify patterns and actively prioritize improvements.
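To illustrate what segmentation of per-conversation ratings can look like, here is a minimal sketch that averages 1–5 ratings by segment. A plain average is an assumption made for illustration: this guide does not say how Fin aggregates ratings into the Customer Experience Score. The segment names are also hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def cx_score_by_segment(ratings: list[tuple[str, int]]) -> dict[str, float]:
    """Average the 1-5 conversation ratings within each segment."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for segment, rating in ratings:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating must be between 1 and 5, got {rating}")
        grouped[segment].append(rating)
    return {segment: fmean(values) for segment, values in grouped.items()}


# Hypothetical (segment, rating) pairs, one per conversation.
sample = [
    ("billing", 2), ("billing", 3), ("onboarding", 5),
    ("onboarding", 4), ("billing", 1),
]
for segment, score in cx_score_by_segment(sample).items():
    print(f"{segment}: {score:.1f}")
```

Because every conversation is rated, segment averages like these rest on full coverage rather than on the small share of customers who answer a survey.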

Tie support performance directly to business outcomes

In the early phases of AI adoption, success is measured through hours saved, headcount avoided, CSAT maintained. But at scale, leadership needs to understand the broader value story: how support performance connects to retention, conversion, and revenue growth.

This means modeling not just cost reduction, but business impact over time:

- Cost reduction

- Revenue influence

- Churn prevention

- Product feedback loop

The most advanced teams go a step further: they directly attribute business value to support data. They track product usage lift after successful resolutions. They measure feature adoption linked to consultative support.

In this model, support becomes a real lever for growth. Not a cost center. A value driver.

Capture real ROI by blending budgets

In a traditional model, support spend is siloed. You fund people, tools, and services separately, and scale them linearly with support volume. When volume goes up, you hire. You expand BPO contracts. You add software to help the team move faster.

AI Agents break that model. They decouple headcount from volume. And that shift should be reflected in how you model cost and capture value.

AI Agents introduce flexibility. They allow you to break down the budget categories that have historically constrained how you invest in customer experience. When a single AI Agent can take on work previously spread across in-house agents, BPOs, and point solutions, your job isn't just to count the savings. It's to reallocate them strategically.

Here's how to capture the value:

- Slow hiring plans: When your AI Agent resolves the majority of Tier 1 conversations, you don't need to backfill every open role or scale hiring as volume grows.

- Reduce BPO spend: The most direct cost trade-off is against outsourced headcount. If your AI Agent takes 60% of your volume, renegotiate your contract accordingly.

- Reassign internal headcount: Move experienced agents into system roles (AI ops, content design, QA) rather than expanding the frontline.

- Shift budget lines: Move spend from services or BPO to tech. Fund AI infrastructure that reduces future services spend. Reinvest AI-driven savings into customer experience design and innovation.

If you treat your AI Agent as "just software," these decisions don't happen. But if you recognize it as a new workforce capability, it becomes clear: your budget should follow your operating model.

Blending budgets doesn't mean cutting. It means realigning spend to match your operating model. You need to shift your thinking from "cost savings" to "budget reallocation."
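The difference between cutting and reallocating can be made concrete with a small sketch in which money moves between budget lines while the total stays constant. The line names and amounts are hypothetical, and `reallocate` is an illustrative helper, not a prescribed method.

```python
def reallocate(budget: dict[str, int], source: str, target: str,
               amount: int) -> dict[str, int]:
    """Return a new budget with `amount` moved from `source` to `target`."""
    if source not in budget:
        raise ValueError(f"unknown budget line: {source}")
    if amount < 0:
        raise ValueError("amount must not be negative")
    if budget[source] < amount:
        raise ValueError(f"{source} has less than {amount} available")
    updated = dict(budget)  # leave the original budget untouched
    updated[source] -= amount
    updated[target] = updated.get(target, 0) + amount
    return updated


# Hypothetical annual budget lines.
current = {"in_house_agents": 600_000, "bpo": 300_000, "software": 100_000}
blended = reallocate(current, source="bpo", target="software", amount=120_000)

print(blended)
print("Total unchanged:", sum(current.values()) == sum(blended.values()))
```

The total is the same before and after. Only the mix changes, which is the point of treating savings as budget to redeploy rather than budget to surrender.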

Reinvest for compounding gains

Every hour your AI Agent saves, every role you no longer need to backfill, every BPO contract you downsize: these are dividends that can be reinvested for long-term growth.

You can reinvest these dividends in:

- Innovative CX design: Use the capacity AI creates to reimagine support journeys, craft more proactive engagement, and test new service models.

- System improvement: Scale your knowledge management function. Assign more team members to content refinement, training data, and AI tuning.

- New roles and responsibilities: Invest in AI ops leads, conversation designers, and QA specialists who can improve AI performance and experience quality at scale.

- Future-proofing your support organization: Invest in the training and enablement you need to build the muscle for sustained AI-led service.

This is what scaling AI actually looks like:

- Support becomes a system.

- AI becomes infrastructure.

- Capacity becomes currency that can be redeployed to drive growth.

Scale is the next frontier

You've proven that AI works. You've seen the early wins. But at scale, something bigger happens. AI stops being a tool and starts becoming infrastructure. Your customer experience gets faster, smarter, and more personalized. Your team evolves into system designers and strategic advisors. Support becomes a true lever for growth, not a cost center.

This is a chance to lead the way for your company, not just your team.

Here's what you're building toward:

- AI Agents resolve 80%+ of inbound volume. They don't just handle the simple stuff. They resolve complex, multi-step issues end-to-end, across channels. They interpret nuance, manage real-time data, and initiate backend actions.

- Human teams focus on system design, feedback, and strategy – not volume. The role of the human team evolves. They're no longer measured by how many tickets they close, but by how effectively they improve the systems behind the scenes. They're still engaging with customers as needed, but their primary focus is training the AI Agent, refining the content, analyzing failure patterns, and shaping how support works at scale. Their job is not to react, it's to design.

- Organizational structure reflects new roles and workflows. Traditional team structures optimized for queue handling don't fit this model. New roles emerge. Workflows shift from ticket-based triage to continuous system improvement. Team rituals, reviews, and KPIs are reoriented around quality, collaboration, and iteration.

- Customer experience becomes a product: designed, measured, and iterated. Support is no longer a reactive function. It's a designed experience, just like your product. Every interaction can be shaped, tested, and refined. The handoffs between AI and humans feel seamless. The tone matches your brand. The experience improves with every conversation. It's support as product, not just service.

- Support drives business outcomes, not just resolutions. The value of support isn't measured by case count. It's measured by customer activation, retention, satisfaction, and expansion, enabled by AI. Support becomes a lever for growth, and a strategic input into product, marketing, and revenue.

Not every team will get there. But you can. With the right systems, roles, and mindset, your AI Agent doesn't just support customers. It becomes the foundation for a smarter, faster, more resilient business.

For support leaders, this is a career-defining opportunity. The chance to pioneer a new model. To show what's possible. To build something the rest of the business follows.

Because this future is inevitable. We're moving closer towards a single, unified Customer Agent that works across the customer journey to deliver a seamless customer experience. You're primed to lead the way. Lean into this moment. The boldest customer service leaders are the ones who will shape what comes next.