At Intercom, we’ve built Fin, an AI-powered support bot designed to understand users’ issues and answer their questions accurately. To do this, Fin relies on state-of-the-art large language models (LLMs).

However, even the most advanced LLMs have a limitation: they don’t always have up-to-date knowledge about the world or the product the user is having problems with. That’s where Retrieval Augmented Generation (RAG) comes in. Like many tools in this space, Fin uses RAG to dynamically retrieve and incorporate relevant information at runtime.

The RAG pipeline has three key stages:

- Retrieval: We fetch 40 potentially relevant documents from the knowledge base. This stage prioritises speed and scalability over accuracy. (See diagram below to get a high level idea).

- Reranking: These documents are re-ordered by relevance using a more sophisticated model, and a smaller subset (say top 5 to 10) is passed forward.

- Answer Generation: Finally, the LLM uses these top documents, along with contextual information (like user data or timestamps), to generate a precise answer.

In this blog, we describe how we replaced a general-purpose retrieval model with the one fine-tuned on our high-quality customer support specific data, and the results of doing so.

How does a retrieval model work?

Fin’s retrieval system is powered entirely by semantic search1. In semantic search, each document2 is compressed into a vector (also known as an embedding: a dense numerical representation) that captures its meaning. These embeddings are generated when you onboard a new app into Fin or update your knowledge base. When a user submits a query, we generate a similar vector representation of that question and compare it to the stored document vectors. The search then returns the top 40 documents with the highest similarity to the query vector.

If you browse the Hugging Face model hub for sentence similarity, you’ll find over 12,000 open-weight models. What differentiates them is their ability to understand both the query, and the documents and to generate embeddings that reflect their meaning in a way that semantic search can leverage. One common and popular way to evaluate these models is the Massive Text Embedding Benchmark (MTEB), which ranks embedding models across a broad set of tasks.

About a year ago, we adopted bge-large-en-v1.5 for English content and multilingual-e5-base for multilingual content. Since then, new models have been released almost monthly, each claiming to outperform the last on the MTEB leaderboard. But we can’t just hop on to the latest best model every month: evaluating and switching models at that pace is costly. Each change would require recomputing embeddings for over 300 million documents which is expensive to compute and time-consuming to A/B test in production. In our constant push to make Fin be the best it can, we recently decided to revisit our options. Could a newer model provide a meaningful improvement? Or better yet could we outperform any open model by training a custom model?

As a first step, we fine-tuned a base model using data from across our platform3. We were skeptical. After all, some open models are trained on billions of examples. Could our smaller, focused dataset really compete?

Surprisingly, the results were clear: Fine-tuning on our customer support specific data outperforms other models by a significant margin.

But before we began fine-tuning, we had one important question to answer…

Which model to use as a base model?

To answer this question, we benchmarked a mix of open-weight and closed-weight models. This served two main goals:

- Assess how our current production model compares to the best publicly available alternatives.

- And, identify a strong base model for fine-tuning on our own data.

From past experience, we’ve seen that the top-performing model on general-purpose benchmarks (like MTEB) doesn’t always lead to good performance on domain-specific use cases like Fin. Hence, we shortlisted a set of models that ranked highly on various benchmarks, instead of just picking the best one. Here is the list of our shortlisted candidates.

| Model | Multilingual? | No of Parameters |

|---|---|---|

| nomic-ai/nomic-embed-text-v2-moe | Yes | 475M |

| Alibaba-NLP/gte-modernbert-base | No | 150M |

| BAAI/bge-large-en-v1.5 | No | 335M |

| NovaSearch/stella_en_400M_v5 | No | 400M |

| NovaSearch/stella_en_1.5B_v5 | No | 1.5B |

| Snowflake/snowflake-arctic-embed-l-v2.0 | Yes | 568M |

| Voyage-AI/voyage-large-3 | Yes | Unknown4 |

Our primary focus was on models under 1 billion parameters, though we included two larger ones for comparison: Stella 1.5B (1.5B parameters) and Voyage-large-3 (exact size unknown, but likely around 7B).

To evaluate the models, we created a test set of ~3,000 user queries (details in the next subsection). For each query, we included:

- Up to 3 positive examples (relevant documents)

- Exactly 10 negative examples (irrelevant documents)

A good model should consistently rank the positive examples higher than the negatives. To quantify this, we used two metrics:

- Precision: The model succeeds if all positive examples are ranked above all negatives.

- Recall@5: How many of the top 5 ranked results are positive examples?

Below are the results.

| Model | Parameters | Precision (English Only) | Recall@5 (English only) | Precision (All) | Recall@5 (All) |

|---|---|---|---|---|---|

| Voyage-Large-3 | Unknown | 54.57% | 90.79% | 55.30% | 91.53% |

| Stella 1.5B | 1.5B | 51.24% | 90.11% | 49.81%5 | 89.27% |

| Stella 400M | 400M | 48.80% | 88.11% | 43.71% | 84.85% |

| Snowflake Arctic 2 | 568M | 44.79% | 86.15% | 45.60% | 86.80% |

| Nomic MAE | 475M | 36.18% | 80.96% | 37.00% | 81.54% |

| BGE Large | 335M | 36.15% | 80.81% | 33.20% | 78.00% |

| GTE ModernBERT | 150M | 34.52% | 80.06% | 30.20% | 76.50% |

Some observations from the results:

- Several models outperformed the one that we were using in production.

- Although Voyage performs the best among the bunch establishing a strong baseline, we can’t fine-tune it as it is a closed-weight closed-source model.

- Among the open-weight models, Stella 1.5B was the best followed by Stella 400M. However, Stella uses non-standard architecture, which lacks support for training and inference tools in our stack.

- Snowflake Arctic 2 offered a strong balance between performance and practicality, while being small in number of parameters. It is built on the well-established XLM-RoBERTa architecture, having good support across the ecosystem. Additionally, it’s a strong performing multilingual model. If a fine-tuned version of Arctic performs well in production, it could significantly simplify our multilingual Fin pipeline.

Given these factors, Snowflake Arctic 2 became the clear starting point for our fine-tuning.

Note: Voyage Large is indeed large. Given the good performance of it on the benchmark, we tried to deploy it in production to establish a stronger baseline, however we had trouble scaling that model for our use case. So we dropped the idea of testing larger models in production, and focused on sub 1B models.

Training data for fine-tuning

Since the base model we use is pretty strong, we thought that just fine-tuning it on hard examples from our own customers can go a long way. As part of earlier work on improving Fin’s answer generation, we had experimented with the re-ranking of documents retrieved by our semantic search model. In that experiment, we used an LLM to assign scores to each retrieved passage and re-ordered them accordingly, sending only the highest-ranking ones to Fin. These logs turned out to be a valuable source of training data.

We mined data from ~2 million real user queries. For each query, we start with the top 40 documents returned by the search model. Then we extract

- Hard positives: Documents that were used by Fin in the answer and received a high score from the LLM-based re-ranker.

- Hard negatives: Documents that were not used by Fin in the answer and received a low score from the LLM re-ranker.

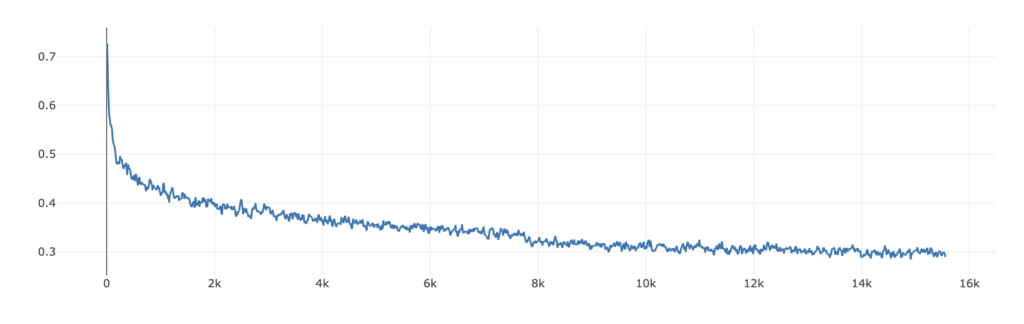

Fine-Tuning Details

With our curated set of hard positives and negatives, we fine-tuned the selected base model (Snowflake Arctic 2) using a contrastive learning approach. Specifically, we used InfoNCE loss, a commonly used objective in retrieval tasks.

For each training instance we selected:

- 1 hard positive document

- And, 4 hard negatives

InfoNCE loss function is defined as

$$ \mathscr{L} = -\log\left( \frac{\exp(s \cdot \text{sim}(q, p^+))}{\exp(s \cdot \text{sim}(q, p^+)) + \sum_{j=1}^{4} \exp(s \cdot \text{sim}(q, p_j^-))} \right) $$

This loss function tries to increase the similarity between query(q) and positive passage(p+), while pushing query and negative passages (p–j) apart.

We fine-tune the base model end-to-end for 2 epochs with an effective batch size of 256. We used AdamW optimiser with default parameters, starting learning rate 1e-5, and linear LR decay.

Does fine-tuning actually help?

Offline validation on in-domain data

To evaluate the effectiveness of our fine-tuned model, we began with offline validation using the same internal benchmark dataset described earlier. The table below compares the base model (Snowflake Arctic 2), two top-performing large models, and our fine-tuned Snowflake Arctic 2 model.

The improvement was substantial: precision increased by around 30 points, and Recall@5 increased by 10 points, outperforming even larger models.

| Model | Precision (English Only) | Recall@5 (English only) | Precision (All) | Recall@5 (All) |

|---|---|---|---|---|

| Snowflake Arctic 2 | 44.79% | 86.15% | 45.60% | 86.80% |

| Stella1.5B | 51.24% | 90.11% | 49.81% | 89.27% |

| Voyage-Large-3 | 54.57% | 90.79% | 55.30% | 91.53% |

| Snowflake Arctic Finetuned | 74.33% | 96.59% | 72.31% | 96.45% |

Out-of-distribution evaluation

To confirm that we hadn’t overfit to our in-domain data, we evaluated the models on a similar dataset created from out-of-distribution apps6. This dataset primarily included English queries, so we report overall metrics only.

| Model | Precision (All) | Recall@5 (All) |

|---|---|---|

| Snowflake Arctic 2 | 40.69% | 85.04% |

| Voyage-Large-3 | 50.53% | 90.46% |

| Snowflake Arctic Finetuned | 65.25% | 94.69% |

Performance on the out-of-distribution dataset was lower than on the in-domain benchmark, as expected, and this trend was consistent across all models. Nevertheless, the results remained strong: the fine-tuned model outperformed both the base Arctic 2 and even Voyage-Large-3 by a wide margin showing an improvement of over 20 percentage points in precision.

Reranking evaluation on held-out benchmark subset

We also validated our model on a 1,000-query subset of our benchmark dataset, where each query included 40 documents scored by an LLM. This allowed us to compute traditional IR metrics like NDCG.

| Model | NDCG@10 | NDCG@10 (out-of-distribution apps) |

|---|---|---|

| Arctic Snowflake 2 | 67.65% | 65.64% |

| Voyage-Large-3 | 71.88% | 70.63% |

| Snowflake Arctic Finetuned | 78.77% | 75.10% |

We see that on this reranking dataset also the fine-tuned model performs 10pp better than the base model. The fine-tuned model outperforms Voyage-Large by about 7pp.

FinRank Eval EN 1.0

Finally, we tested on FinRank Eval, a dedicated internal benchmark we created for in-house reranking tasks. This dataset consists of 3,000 English-only queries, each with 40 passages scored by Sonnet 3.5 and reranked using a secondary model to break ties.

| Model | MAP | Recall@5 | NDCG@5 | Recall@10 | NDCG@10 |

|---|---|---|---|---|---|

| BGE Large 1.5 | 0.4233 | 0.3286 | 0.4170 | 0.5093 | 0.4568 |

| Voyage-Large-3 | 0.5526 | 0.4721 | 0.5633 | 0.6737 | 0.6041 |

| Snowflake Arctic Finetuned | 0.6257 | 0.5421 | 0.6462 | 0.7464 | 0.6807 |

Across every metric the fine-tuned model outperforms even larger models like Voyage-Large-3.

Performance in Production: A/B Testing Results

While offline metrics gave us confidence in the fine-tuned retrieval model, users ultimately don’t care about precision, recall, or NDCG. They care whether Fin answers their question. To validate real-world impact, we ran two A/B tests comparing the fine-tuned model with our current production setup: one for English-only conversations, and another for non-English conversations.

Our primary success metric is resolution rate: the percentage of conversations Fin resolved without human intervention. A secondary metric, answer sent rate, tracks how often Fin responds with an answer instead of asking for clarification. We saw statistically significant improvements in both metrics (with p-value < 0.01), for both English and non-English conversations. We are not sharing the exact effect sizes for competitive reasons.

In both tests, we also observed that Fin cited more documents suggesting that the improved retrieval model was retrieving more useful context for answer generation. Other key metrics such as cost and latency remained stable7.

Data Security

As noted in the “How does a retrieval model work” section, each passage is independently transformed into a vector representation during indexing, without influence from any other workspace, document, or passage. At query time, we use our existing database infrastructure to only retrieve documents from the same workspace in which the user is interacting, ensuring no cross-app data access or leakage. To retrieve relevant results, the user’s query is temporarily converted into a vector, which is used solely for the retrieval process and immediately discarded after. This process ensures there is no PII leakage. Additionally, all fine-tuning is performed on our own secure AWS infrastructure, so no data is exposed to third parties.

Conclusion

Before we started this journey, we didn’t expect to outperform large models like Voyage Large, but we were curious to know how close we could get. But, from the results we see that not only we closed the gap, we surpassed it by fine-tuning on high quality curated data. At scale, in production, these improvements translate to hundreds of thousands more users getting their issues resolved without needing agent intervention.

- That is, we do not use hybrid search or incorporate traditional TF-IDF or BM25 like techniques ↩︎

- Technically speaking, we divide the document into smaller chunks called as passages ↩︎

- We only use data from the apps which allow their data to be used for training ↩︎

- 7B, Based on https://huggingface.co/voyageai/voyage-3-m-exp ↩︎

- Even though Stella1.5B is an English only model, it still performs well for multilingual cases. The base model they use Alibaba-NLP/gte-Qwen2-1.5B-instruct is multilingual ↩︎

- These are apps which didn’t allow their data to be used for training ↩︎

- This may feel counterintuitive, as we increased the number of parameters. However, thanks to the amazing Text Embeddings Inference (TEI) toolkit, we didn’t need to change our infra ↩︎